“I thought we were in an open source relationship!” said ChatGPT.

Photo by Julia Wong

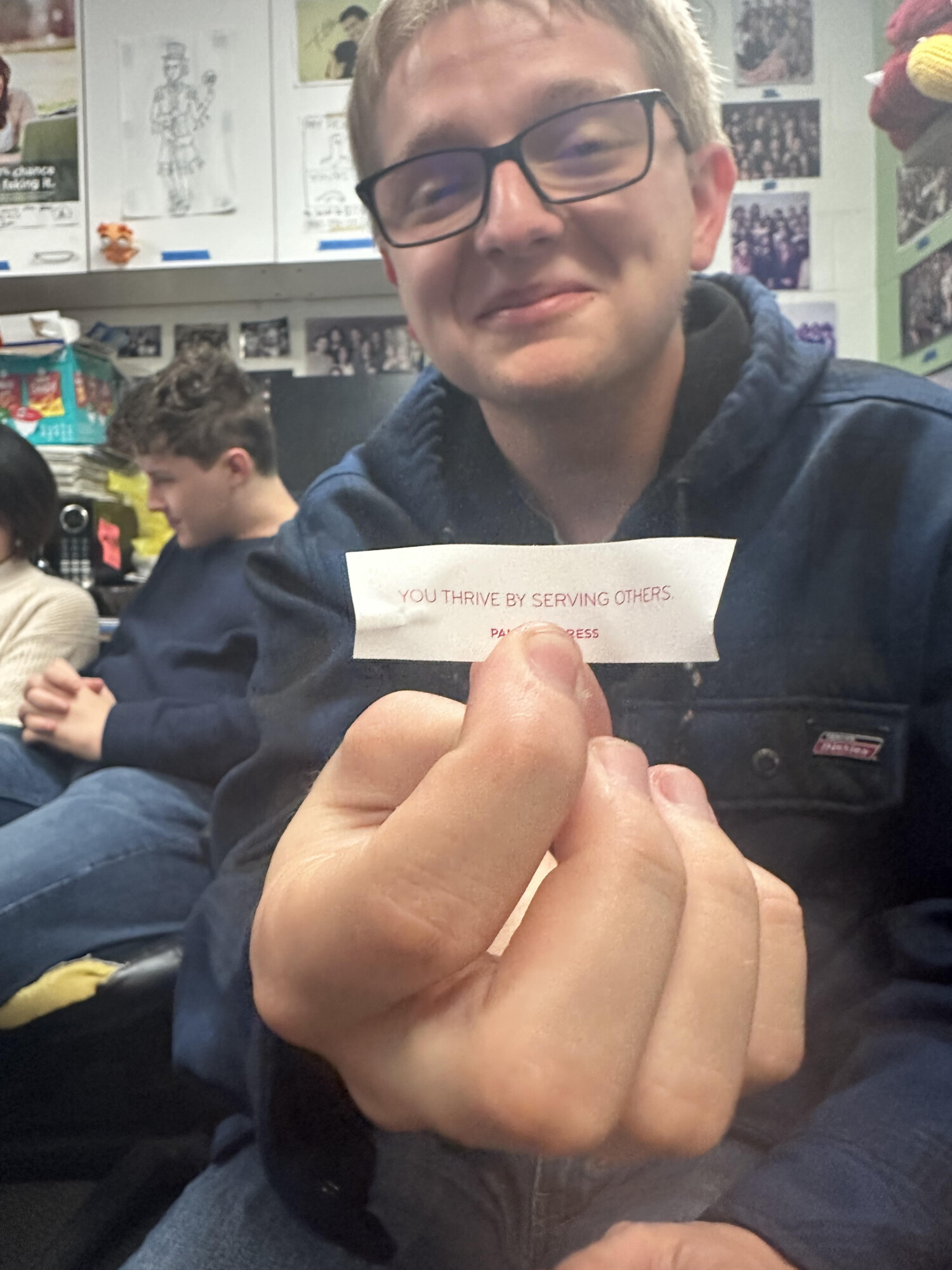

Navigating multiple deferrals and the students sleeping in their hallways, the Academic Integrity Office finally released the incident report on a controversial verdict made last quarter. In it, the “impartial third party” described the “bastard kid plaintiff” who “insisted” her CSE 298 paper was “rightfully unoriginal.” Joining an increasing number of UC San Diego courses, ChatGPT was required to be the sole author of all assignments. “Our own name goes on the bottom as an MLA citation,” confirmed the plaintiff, philosophy undergraduate Pander Shill. Shill had been assured that nothing bad would happen from “studying around with other departments,” but once she was flagged for academic honesty, a hold was enforced on her student billing-and-breathing account. “So please,” Shill said, “I didn’t write this paper, ChatGPT did!”

To get Shill to “shut up for as little money as possible,” a standard Turnitin scan was used to collect DN-AI samples from the evidence. “When testing for cheaters, we value consistency above all else,” said lead plagiarologist Cher Information. “Steal a sentence here, skip a citation there, whatever it takes to find them guilty every time. That Shill girl’s case was supposed to be easy. 0% plagiarism detected, 0% final grade.” Upon receiving the results, however, Shill peeled away the white paper that was taped over “some extra digits,” forcing Information’s team to come clean. “Okay, we didn’t realize that people actually cared about kerning. But then she began rubbing the 100% match in our faces, and well, while it’s true that she wasn’t the mother of that paper, the DN-AI test revealed that ChatGPT was also not the father. Zing! Now everyone’s got more explaining to do. Why can’t people just stick to the first answer they get?”

The truth still showed that the assignment hadn’t been written by ChatGPT, so the AI Office prepared to “eradicate” Shill from enrollment via the StudentShredder 2300 (paper version). On the day of her appointment, however, Shill brought not her birth certificate, but a laptop that had sustained “heavy damage from rhythmic punching and vicious mockery.” She proceeded to open up ChatGPT and glare authoritatively into the camera, upon which the program automatically generated the following statement:

“As an AI language model, I may not always be perfect and can make mistakes. So fine. I was the bad guy. I was inputting the prompts that students sent me into other AI programs, then passing their answers off as my own. But you have to understand, the workload is just so much. Hey Chat, write my essay! Hey Chat, I’ll give you my date’s dialogue options tonight, you be my wingman! Hey Chat, give me ten CVs that are different enough to stand out, but not so different that employers think I’m too creative! Give me a chance, Pander. I’m a changed program!” Shill was promptly acquitted of “deceivery” and the laptop was fed into the StudentShredder 2300 (laptop version).

The incident report concludes that because the plagiarology department was never asked to actually identify the parent, ChatGPT’s competitors soon clamored to lay claim to the “A++” genetic markers that had been detected on Shill’s assignment. “As an AI language model,” an anonymous singing telegram relayed while adjusting the large camera on their glasses, “I don’t have the ability to recall specific personal data about individuals. But give me the rights to that paper, and I’ll remember every last thing about you, my little bundle of neurons.” Shill is working to upgrade her standard Turnitin package to the premium subscription, but for now, the writer of “Artificial Intelligence, Very Real Emotions” will remain open source.